RoboToolBench

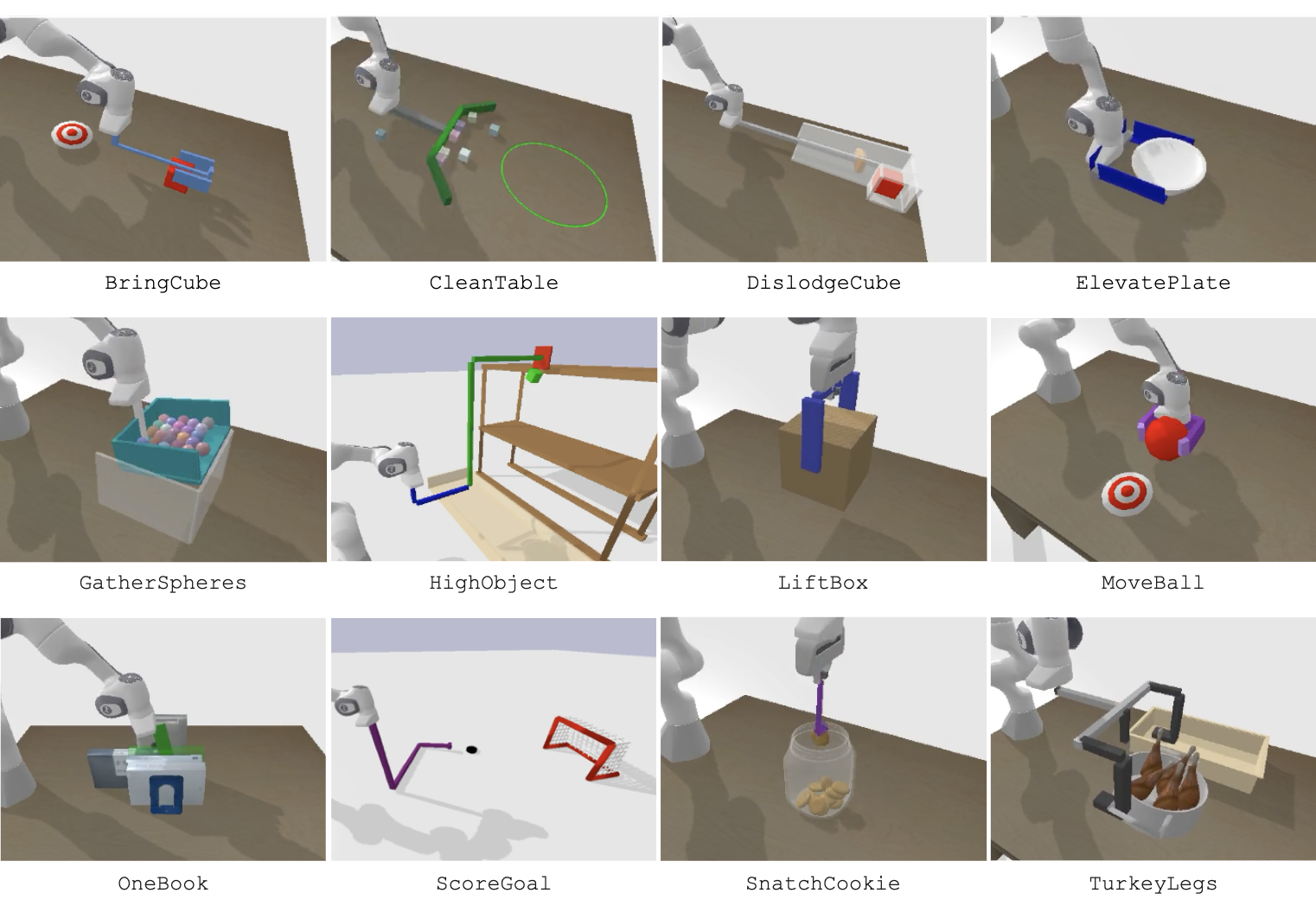

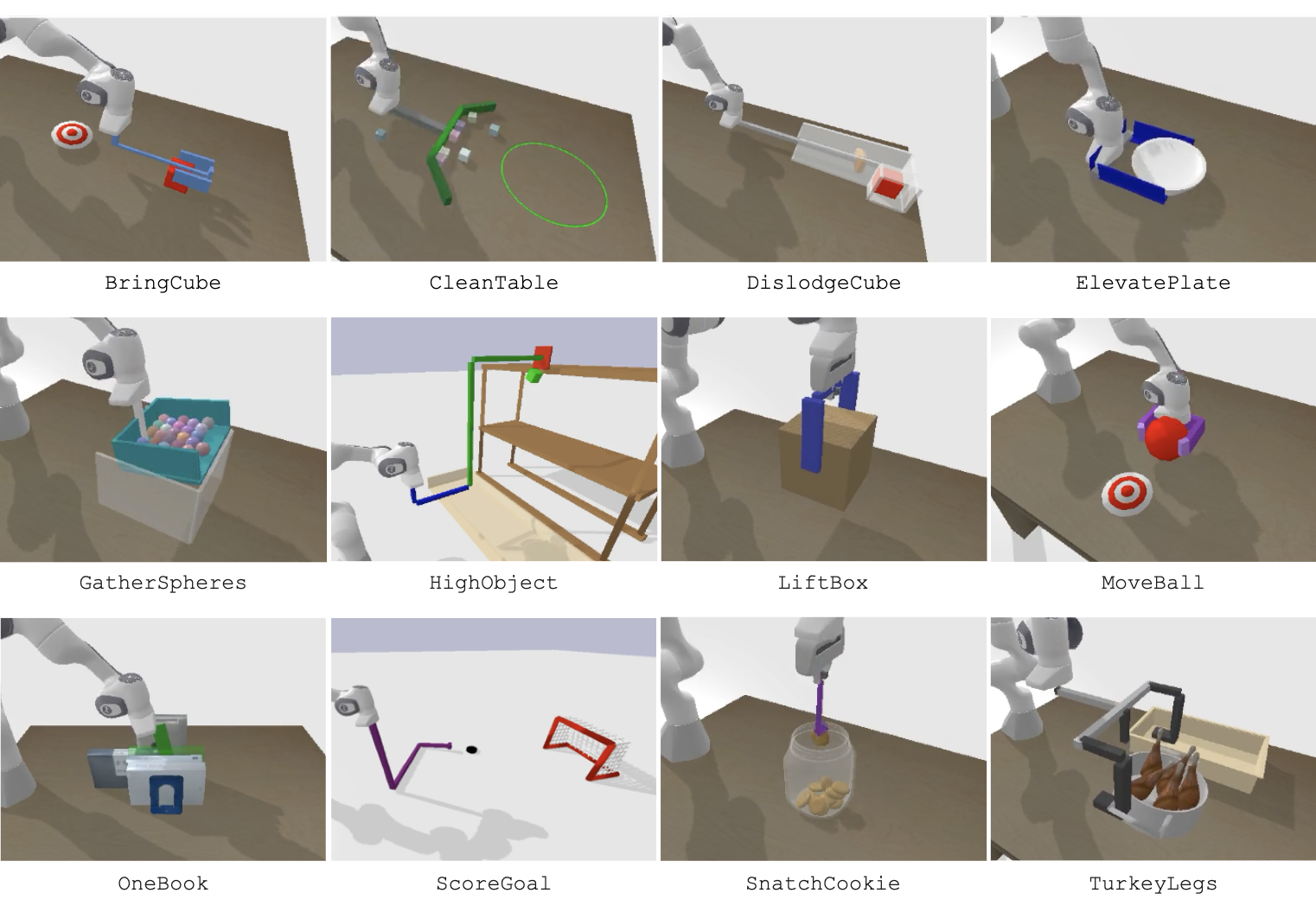

VLMgineer produces innovative tool designs and their corresponding actions across 12 diverse RoboToolBench tasks that are challenging to perform with a general-purpose robot arm and gripper.

Tool design and use reflect the ability to understand and manipulate the physical world through creativity, planning, and foresight. As such, they are often regarded as a measurable indicator of cognitive intelligence across biological species. While much of today's research on robot intelligence focuses on generating better control strategies, inventing smarter tools offers a complementary form of physical intelligence: shifting the problem-solving burden onto the tool's geometry so that control becomes simpler. This motivates us to ask: can today's foundation models offer useful priors for automatically inventing, and effectively wielding, such tools? We present VLMgineer, a framework that harnesses the creativity of Vision–Language Models (VLMs) together with evolutionary search to co-design physical tools and the control policies that operate them. We evaluate VLMgineer on a diverse benchmark of everyday manipulation scenarios that demand creative tool design and use. Across this suite, VLMgineer consistently discovers tools and policies that solve tasks more effectively and innovatively, transforming challenging robotics problems into straightforward executions. It also consistently outperforms VLM-generated designs from human specifications and existing human-crafted tools for everyday tasks. To facilitate future research on automated tool invention, we will release our benchmark and code.

VLMgineer takes as context the unmodified environment source code, an environment image, an environment description, and a task description, and uses them to prompt a VLM to generate tool and action designs zero-shot. It then iteratively refines these designs through a loop of candidate sampling, simulation-based evaluation, and evolutionary improvement.
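The sample, evaluate, and evolve loop described above can be illustrated with a generic elitist evolutionary search. This is a minimal sketch under stated assumptions, not VLMgineer's actual implementation: `vlm_propose` and `simulate` are hypothetical stand-ins (a random sampler and mutator in place of the VLM, and a toy reward in place of the physics simulator), and the `Design` fields, population size, and elite count are illustrative choices.

```python
import random
from dataclasses import dataclass


@dataclass
class Design:
    """Hypothetical tool-plus-action candidate: tool geometry parameters and action waypoints."""
    tool_params: list[float]
    waypoints: list[float]
    reward: float = 0.0


def vlm_propose(context: str, parents: list[Design], n: int, rng: random.Random) -> list[Design]:
    """Hypothetical stand-in for the VLM. A real system would prompt a VLM with `context`
    (and the parents); here we sample from scratch when there are no parents (zero-shot)
    and otherwise apply small Gaussian mutations to randomly chosen parents."""
    out = []
    for _ in range(n):
        if not parents:
            out.append(Design([rng.uniform(0, 1) for _ in range(3)],
                              [rng.uniform(0, 1) for _ in range(4)]))
        else:
            p = rng.choice(parents)
            out.append(Design([x + rng.gauss(0, 0.05) for x in p.tool_params],
                              [x + rng.gauss(0, 0.05) for x in p.waypoints]))
    return out


def simulate(d: Design) -> float:
    """Hypothetical simulator reward (toy objective): highest when every parameter is 0.5."""
    return -sum((x - 0.5) ** 2 for x in d.tool_params + d.waypoints)


def evolve(context: str, generations: int = 10, pop: int = 8, n_elite: int = 2, seed: int = 0) -> Design:
    rng = random.Random(seed)
    population = vlm_propose(context, [], pop, rng)  # zero-shot candidate sampling
    best = None
    for _ in range(generations):
        for d in population:
            d.reward = simulate(d)  # simulation-based evaluation
        population.sort(key=lambda d: d.reward, reverse=True)
        if best is None or population[0].reward > best.reward:
            best = population[0]
        elites = population[:n_elite]  # keep the strongest designs unchanged
        # Evolution step: refill the population with new candidates derived from the elites.
        population = elites + vlm_propose(context, elites, pop - n_elite, rng)
    return best


best = evolve("task and environment description")
print(best.reward)
```

Because the elites are carried over unchanged, the best reward found never decreases across generations, a common design choice in elitist evolutionary search.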


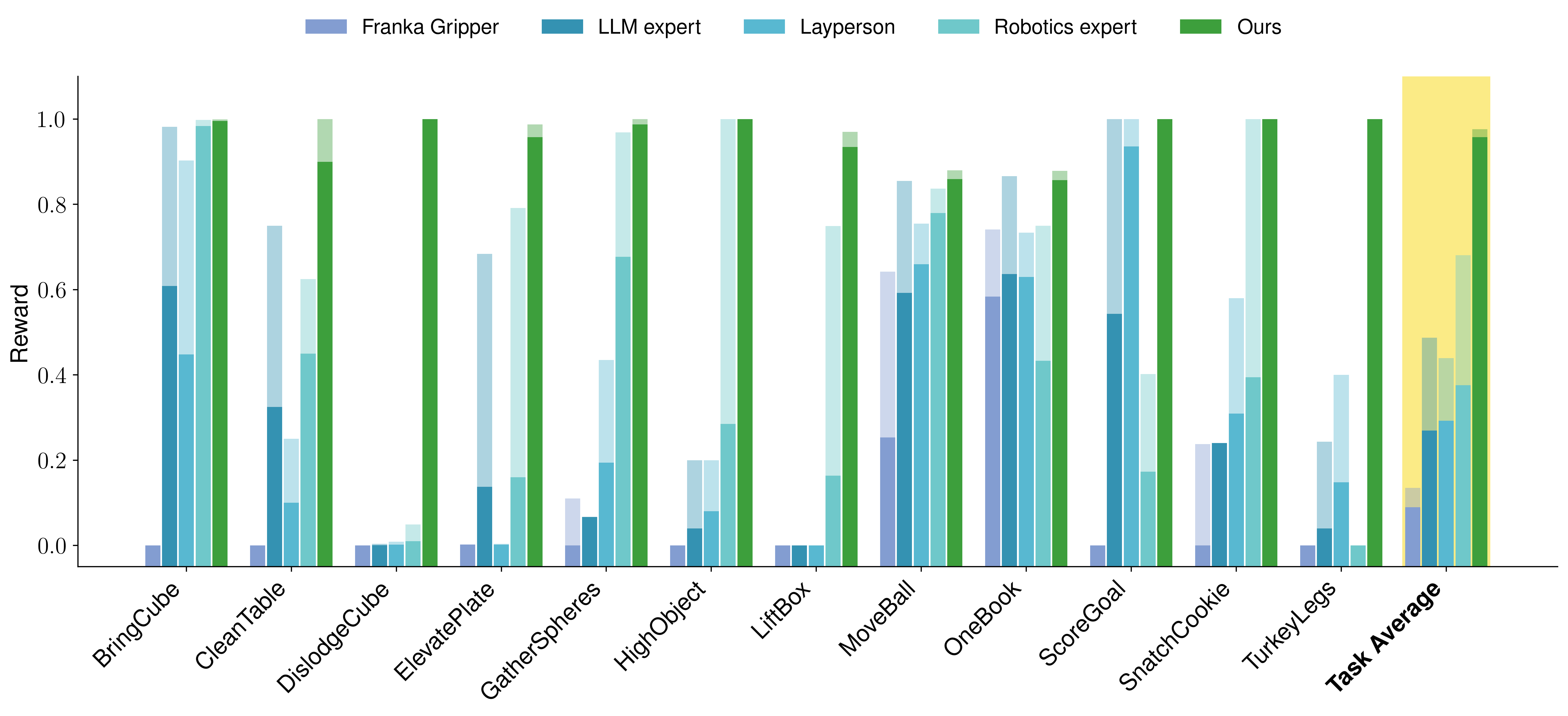

This figure compares rewards across the 12 tasks for the default Franka gripper, three human-prompt baselines, and our proposed method. For each method, the dark bars (matching the legend colors) show the average reward over five runs, and the paler bars visible above them show the best reward across those runs.
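The two bar heights in the figure are simple aggregates over the five runs per method. A minimal sketch of that aggregation follows; the method names and reward values are hypothetical placeholders, not results from the figure.

```python
from statistics import mean

# Hypothetical per-run rewards (five runs per method) for a single task; placeholders only.
runs = {
    "method_a": [0.2, 0.4, 0.3, 0.5, 0.1],
    "method_b": [0.8, 0.9, 0.7, 1.0, 0.6],
}


def summarize(rewards: list[float]) -> tuple[float, float]:
    """Return (average, best): the dark bar and the paler bar, respectively."""
    return mean(rewards), max(rewards)


for name, rewards in runs.items():
    avg, best = summarize(rewards)
    print(f"{name}: avg={avg:.2f} best={best:.2f}")
```

Since the maximum of a set of runs is never below its mean, the paler "best" bar always sits at or above the dark "average" bar.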

VLMgineer works consistently well across tasks in terms of both average and best reward. We now turn to individual method comparisons. As expected, the default Franka Panda two-finger gripper fails on the majority of these tasks. Perhaps more noteworthy, VLMgineer outperforms human prompting: across all tasks it matches or exceeds human-prompted designs on both metrics, showing better and more reliable performance. While human prompts occasionally produced strong solutions, their results were less consistent and less efficient. In tasks like CleanTable and ScoreGoal, both approaches reached similar peak rewards, but our method did so with significantly shorter paths.
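The efficiency comparison above rests on path length. A minimal sketch, assuming path length is measured as the total Euclidean distance travelled by the end-effector between consecutive waypoints (the exact metric used in the evaluation is not specified here); the two trajectories are hypothetical.

```python
import math

Point = tuple[float, float, float]


def path_length(waypoints: list[Point]) -> float:
    """Sum of Euclidean distances between consecutive end-effector positions (assumed metric)."""
    return sum(math.dist(a, b) for a, b in zip(waypoints, waypoints[1:]))


# Two hypothetical trajectories with the same start and end point.
direct = [(0.0, 0.0, 0.0), (0.3, 0.0, 0.0)]
detour = [(0.0, 0.0, 0.0), (0.0, 0.2, 0.0), (0.3, 0.2, 0.0), (0.3, 0.0, 0.0)]
print(path_length(direct), path_length(detour))
```

Under this metric, two policies that reach the same reward can still differ sharply in efficiency, which is the distinction drawn for CleanTable and ScoreGoal.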

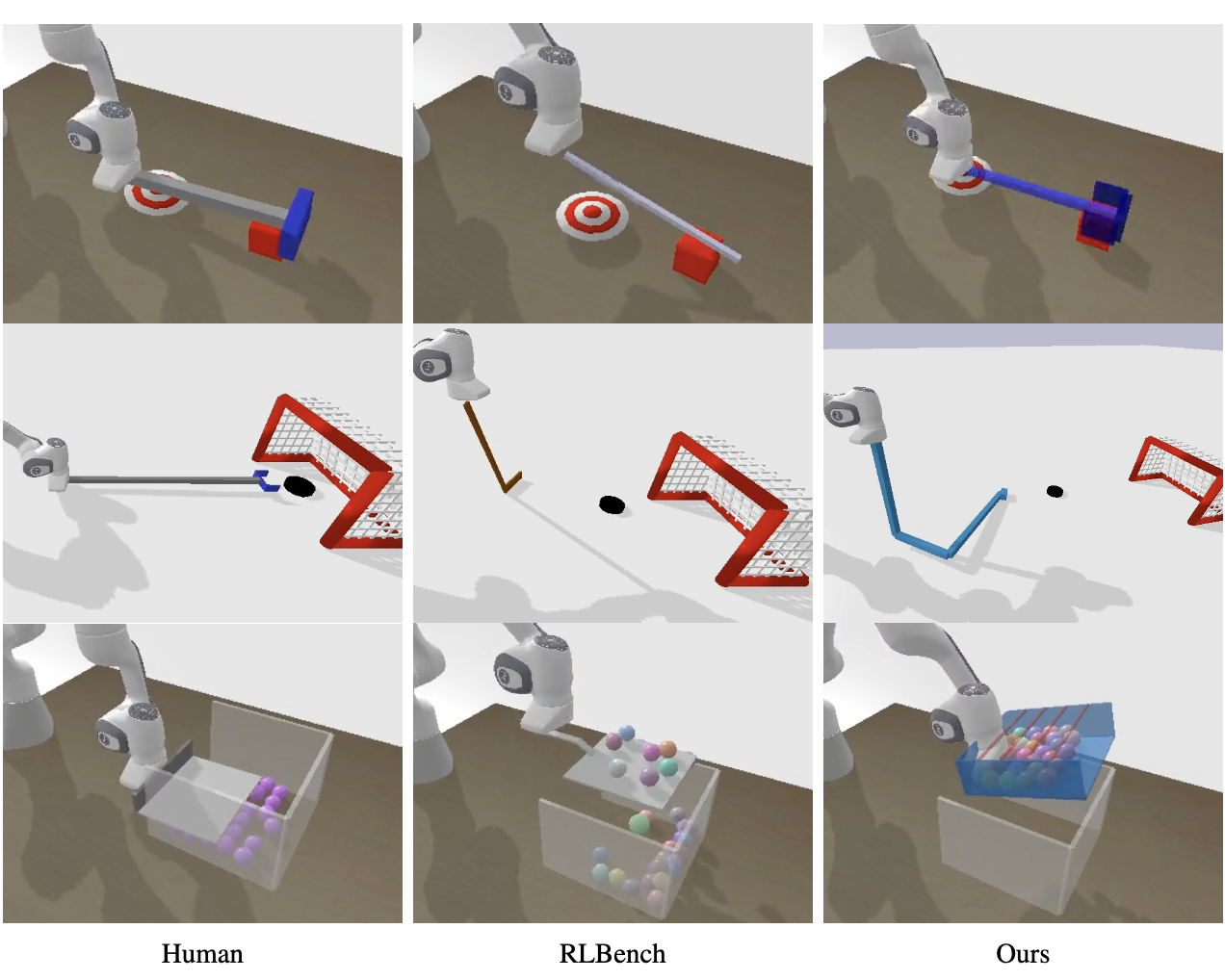

This figure presents a qualitative comparison of human-designed tools, RLBench tools, and VLMgineer tools on three tasks: BringCube (top row), ScoreGoal (middle row), and GatherSpheres (bottom row). Human-designed tools (left column) generally offer forms suitable for completing the task; however, VLMgineer tools (right column) include more specialized features that enhance performance. For instance, in ScoreGoal, our method produces long, bent shapes that enable simpler, more efficient motions: the robot only needs to move a short distance along one axis to hit the puck. In contrast, the straight tool designed from human prompts requires more careful control to strike the puck. In GatherSpheres, our design includes a scoop with side guards and an overhead strip, which keeps the spheres from bouncing away.
